Kerr Avionics, LLC

Kerr Avionics’ patented technology pairs an encoded LED beacon on the ground (a slightly modified LED runway or landing zone (LZ) light) with a video camera onboard an aircraft to penetrate obscurants such as fog, virtually eliminating missed approaches. The FAA has dubbed this approach IQVR, for Instrument Qualified Visual Range. IQVR (or Synchronous Detection) is essentially a Runway Visual Range for aircraft equipped with an Enhanced Vision System (EVS) or a video camera. It enables the pilot/operator to see, and the Primary Flight Display or Head-Up Display to show, the landing zone 30% to 50% sooner than the unaided human eye or an EVS sensor alone.

IQVR “turbo-charges” existing multispectral Enhanced Vision System sensors with what is essentially an image processing software download and a simple modification to the LED lighting elements marking the aircraft runway/touchdown zone or helicopter landing zone.

As such, it constitutes a mechanism for reducing air transport greenhouse gas emissions: it significantly improves the efficiency of air traffic flow by reducing the diversions, holding patterns, go-arounds, etc. caused by visibility-related airport closures. In other words, IQVR promises to virtually eliminate balked landings due to poor visibility.

For aircraft equipped with Enhanced Vision Systems, the software resides inline between the EVS sensor pack and the CPU. For aircraft without EVS, a simple commercially available off-the-shelf video camera mounted inside the aircraft or on the drone (UAS) will suffice.

Commercially available off-the-shelf LED lighting elements, from handheld flashlights to runway and landing zone identification lights, become IQVR compatible with a simple “in-line” modification to the ground side of the power circuit.

WHY IT’S NEEDED.

Kerr Avionics’ patented image processing system, incorporated into an aircraft’s electronic approach-to-landing flight guidance system, enables a 30% to 50% earlier acquisition of the landing environment, virtually eliminating missed approaches and diversions at airports equipped with LED/IQVR lighting.

On short final, IQVR can provide backup precision guidance to touchdown in the event the GPS signal is lost due to equipment failure or jamming.

For helicopter operations at night or in periods of poor visibility, IQVR enables pilots to:

- “Declutter” the sea of lights over large urban areas, leaving only the target landing zone visible.

- For Med Evac operations, locate and safely land at the accident scene.

- Offshore, locate and land on offshore platforms or ships at sea.

- Rely on non-jammable approach guidance as a backup to GPS and ILS.

For UAS (drone) operations, the proliferation of programmed LED lights, encoded for specific locations or persons, provides automatic guidance to a specific location. Programmed LED lights can also be set by the operator to deliver coded messages to search and rescue UAS or manned aircraft, indicating urgent requirements for medical, food or other triage support.

HOW IT WORKS.

Kerr Avionics LLC has patented, field-proven, software-based technology that typically penetrates obscurants 25-50% beyond conventional Runway Visual Ranges, using an economical onboard video camera and cooperative pulsing of the LED lights that are supplanting conventional runway and landing zone illuminators. The system combines new-generation LED-based ground lighting with airborne video camera technology in a configuration that significantly penetrates obscurants such as fog. The development, which initially involved an informal partnership with FAA scientists, permits a camera to “see” well beyond the capability of the human eye: it acquires cooperative lights at extended ranges by day or night, in clear or obscured (e.g., fog) conditions. In its basic implementation, it yields signal-to-noise ratio (SNR) enhancements of several orders of magnitude, which conservatively translates to a range improvement of at least 50% with respect to, for example, a Cat II RVR (see Ref. 1).

(The large SNR improvement is required because fog causes an exponential fall-off of both target-light energy and scene contrast with range. The lower the visibility, the higher the percentage range advantage; this is because the range-squared falloff owing to the simple geometric spreading of the lights’ energy is less of a factor at shorter ranges. More detail is given in Ref. 1.)
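To make the two fall-off terms concrete, the following Python sketch combines exponential fog extinction with inverse-square spreading (Allard's law for a point light) and solves for the range at which a light becomes `gain` times dimmer than at a baseline range. It is only an illustration: the function names, the example numbers, and the assumption that a processing gain maps directly onto a tolerable drop in received illuminance are ours, and this is not the model of Ref. 1.

```python
import math


def illuminance(intensity_cd, sigma_per_m, range_m):
    """Allard's law: point-source illuminance after fog extinction and 1/R^2 spreading."""
    return intensity_cd * math.exp(-sigma_per_m * range_m) / range_m ** 2


def extended_range(base_range_m, sigma_per_m, gain):
    """Range at which the light is `gain` times dimmer than at base_range_m.

    Assumption (hypothetical): a detection gain of `gain` lets the sensor
    tolerate an illuminance that is lower by the same factor.
    """
    if gain < 1.0:
        raise ValueError("gain must be >= 1")
    target = illuminance(1.0, sigma_per_m, base_range_m) / gain
    lo = hi = base_range_m
    # Grow the upper bracket until the light is dimmer than the target.
    while illuminance(1.0, sigma_per_m, hi) > target:
        hi *= 2.0
    # Bisection works because illuminance decreases monotonically with range.
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if illuminance(1.0, sigma_per_m, mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Example values (assumed): 400 m meteorological visibility converted to an
# extinction coefficient with Koschmieder's relation (sigma = 3.912 / V).
sigma = 3.912 / 400.0
new_range = extended_range(350.0, sigma, gain=100.0)
print(f"baseline 350 m -> {new_range:.0f} m")
```

Because the extinction term is exponential in range, even a large gain buys only a modest increase in range, which is why the text stresses that a large SNR improvement is required.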

An important bonus is that the lights may also carry coding (such as a runway or LZ identifier), which the system recognizes with high integrity (Ref. 2). All clutter, including a sunlit background as well as extraneous area lighting in VFR conditions, is rejected. The system was first successfully field demonstrated through a Max-Viz-sponsored program with an engineering team at Harvey Mudd College; this group was previously employed by the FAA as their experts to develop prototype LED-based high-intensity runway and approach lighting.

Once the lights or beacons are acquired by the camera system, they may be rendered into symbolic form and overlaid onto the thermal scene image of an EVS, or onto the displayed image from a simple, conventional video camera. Alternatively, this constitutes a powerful means of machine capture of a runway or LZ from its outline of lights, including in a degraded visual environment (DVE); applications then include the generation of a direct navigation signal for approach and landing, as described below.

1.1 Basic Principle.

Envision an LED that is pulsed 30 times per second, and a camera whose frame rate is twice that, or 60 frames per second. The camera “views” the instantaneous background scene including the target lights, and stores it in a “frame grabber”; it then views the scene without the target lights, and subtracts this frame from the previous one. The result is that the background, including all “clutter” lights and features, is precisely subtracted out, leaving only the targets. In the case of a sunlit fog, this greatly enhances the ability to penetrate the DVE and acquire the lights.

Thus, the basic concept is to take advantage of the ready capability of solid-state sources (such as LEDs) to be pulsed. The pulses are synchronized with the camera frame rate by GPS-derived clocking: timing signals for the target-light pulsing and for the camera frames are derived from inexpensive GPS receivers on the ground and in the aircraft, respectively. Each pair of camera frames is captured with the relevant lights pulsed “on” and “off” respectively, followed by a subtraction operation in the video processor. The result is that only the desired lights are retained, and all other scene illumination is canceled down to the system noise level. This engineering principle is known by such terms as “synchronous” or “coherent” detection, and results in a large signal-to-noise advantage (Ref. 1).

(The light-pulsing and camera frame rates that we use will actually be faster than standard video rates, with the final presentation to the display, and possibly to a nav computer, at standard video rates. This enhances performance in several ways, and ensures that the lights will not have a discernible “flicker” when viewed directly by the pilot.)
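The on/off frame subtraction can be sketched in a few lines of Python. This is a toy simulation under our own assumptions, not the production processing: the frame size, brightness levels, noise figure, and all function names are hypothetical, frames are plain lists of rows, and synchronization is taken as perfect. It averages the difference over many frame pairs, which is one simple way that faster-than-video capture rates could be turned into extra signal-to-noise.

```python
import random


def capture(background, beacon_px, beacon_level, lights_on, noise_sigma, rng):
    """Simulate one camera frame: static scene + sensor noise (+ beacon if pulsed on)."""
    frame = []
    for r, row in enumerate(background):
        out = []
        for c, value in enumerate(row):
            pixel = value + rng.gauss(0.0, noise_sigma)
            if lights_on and (r, c) == beacon_px:
                pixel += beacon_level
            out.append(pixel)
        frame.append(out)
    return frame


def synchronous_detect(frame_pairs):
    """Average (on - off) over all pairs; anything identical in both frames cancels."""
    rows, cols = len(frame_pairs[0][0]), len(frame_pairs[0][0][0])
    acc = [[0.0] * cols for _ in range(rows)]
    for on, off in frame_pairs:
        for r in range(rows):
            for c in range(cols):
                acc[r][c] += on[r][c] - off[r][c]
    n = len(frame_pairs)
    return [[v / n for v in row] for row in acc]


def brightest(image):
    """Return (row, col) of the largest residual."""
    cells = ((r, c) for r in range(len(image)) for c in range(len(image[0])))
    return max(cells, key=lambda rc: image[rc[0]][rc[1]])


rng = random.Random(1)
# Bright gradient standing in for sunlit fog, plus one steady "clutter" light.
background = [[100.0 + 5.0 * c for c in range(16)] for _ in range(16)]
background[3][12] = 250.0
beacon = (9, 4)  # a weak pulsed light, far dimmer than the scene around it

pairs = [
    (capture(background, beacon, 5.0, True, 2.0, rng),
     capture(background, beacon, 5.0, False, 2.0, rng))
    for _ in range(64)
]
residual = synchronous_detect(pairs)
print("strongest residual at", brightest(residual))
```

In this toy scene the beacon adds only 5 counts to a background of 100 to 250 counts, yet after subtraction the steady clutter light and the gradient both drop to the noise floor while the pulsed pixel remains.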

If the airfield or LZ has already upgraded to LED lighting, the only infrastructure action required for our system is a modification of the power supply pulsing. In the event that LED installation is part of the changeover, the LEDs are designed to utilize existing power distribution systems in order to minimize the cost of the installation.

A U.S. patent was granted to Astronics Max-Viz, Inc. on April 27, 2010, followed by international patents. Given a suitable level of standardization for the concept, our FAA colleagues envision a regulatory expansion factor relative to the real-time, conventional RVR at a landing field; this “RVR multiplier” will apply to appropriately equipped aircraft and ground facilities, and has been dubbed the “Instrument Qualified Visual Range” (IQVR).

1.2 Derivation of a Real-Time Nav-to-Land Signal.

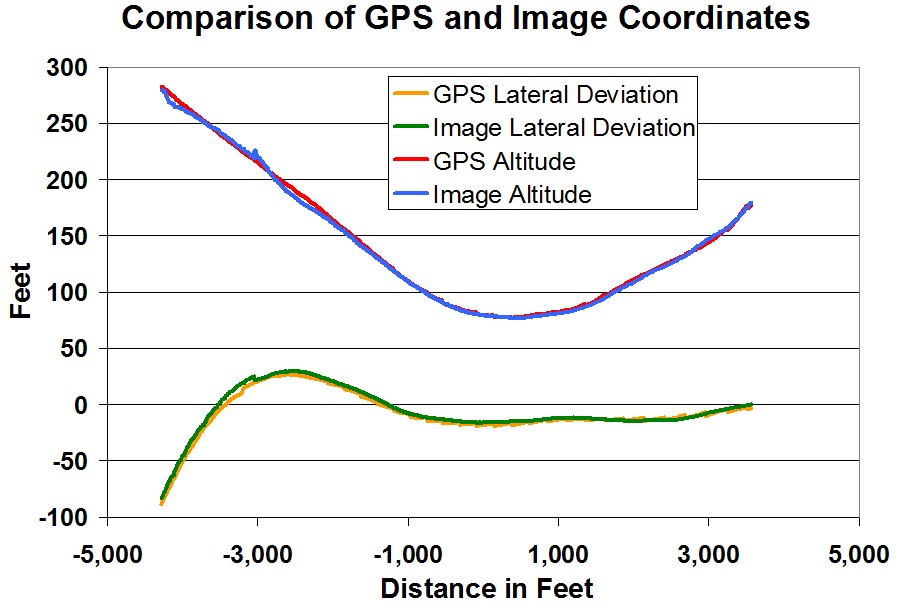

Four or more lights outlining a rectangular runway or LZ (or placed evenly at points on a circular LZ) will be imaged by the IQVR system as a perspective-generated trapezoid. The geometrical shape and size of this four-point figure are sufficient to derive the altitude, heading, and distance from the runway/LZ. This local navigation signal may also be compared with an on-board nav system and database/synthetic vision system (SVS) to provide autonomous verification of position and hence of the actual SVS display.
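A minimal Python sketch of that geometry follows, under strong simplifying assumptions of our own: an ideal pinhole camera with a known focal length in pixels, a level camera pointed straight along the approach axis (no pitch, roll, or yaw), and a pad of known width. Only the two near-edge lights are used. A general solution would solve for full position and attitude from all four lights; the names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    distance_m: float  # along-track distance from camera to the pad's near edge
    height_m: float    # camera height above the plane of the lights
    lateral_m: float   # pad centerline offset; positive = pad lies to the right


def project(x_m, height_m, z_m, focal_px):
    """Pinhole projection of a ground light, in pixels from the image center.

    u is positive to the right, v is positive downward (below the horizon).
    """
    return (focal_px * x_m / z_m, focal_px * height_m / z_m)


def pose_from_near_edge(left_px, right_px, pad_width_m, focal_px):
    """Recover distance, height, and lateral offset from the near-edge lights."""
    (u_left, v_left), (u_right, v_right) = left_px, right_px
    width_px = u_right - u_left
    if width_px <= 0:
        raise ValueError("right light must lie to the right of the left light")
    # Apparent width shrinks as 1/distance: width_px = focal_px * W / z.
    distance = focal_px * pad_width_m / width_px
    # Depression below the horizon gives height: v = focal_px * h / z.
    height = 0.5 * (v_left + v_right) * distance / focal_px
    # Horizontal position of the edge midpoint gives the lateral offset.
    lateral = 0.5 * (u_left + u_right) * distance / focal_px
    return Pose(distance, height, lateral)


# Round trip with assumed values: 20 m pad, near edge 200 m ahead,
# camera 30 m above the pad, pad centerline 5 m to the right.
focal = 1000.0
left = project(5.0 - 10.0, 30.0, 200.0, focal)
right = project(5.0 + 10.0, 30.0, 200.0, focal)
pose = pose_from_near_edge(left, right, pad_width_m=20.0, focal_px=focal)
print(pose)
```

The far-edge lights, which this sketch ignores, would give a second, independent distance estimate that could be used to check the first or to solve for the attitude terms assumed away here.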

Consider for example a square landing pad acquired through the fog; the image will look like this:

[Figure: two example views of the pad outline. Panels: “Right lateral deviation”; “Low-angle approach, straight-in”.]

The following actions are enabled:

- The 3-dimensional position of the helicopter with respect to the pad is calculated from our “separate thread” that is not dependent upon – and can be continuously compared to – the GPS/nav system output (thus constituting an autonomous, “virtual ILS”)

- The system monitors database integrity and annunciates fixed and transient hazards when sensed

- Correct registration of an SVS display vs the EVS imagery is assured

- Guidance symbology may be generated, or the autonomous nav signal may be coupled to the landing system
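The continuous comparison against the GPS/nav output in the first item can be illustrated with a very small monitor. This Python sketch assumes both sources report position in the same pad-relative frame and uses an arbitrary 15 m tolerance; the type, function name, and threshold are hypothetical and carry no regulatory meaning.

```python
import math
from typing import NamedTuple


class Position(NamedTuple):
    along_m: float    # distance to the pad along the approach axis
    lateral_m: float  # offset from the approach centerline
    height_m: float   # height above the pad


def cross_check(vision, gps, tolerance_m=15.0):
    """Compare the image-derived fix with the GPS/nav fix.

    Returns (agrees, error_m). A disagreement would be annunciated to the
    crew rather than silently resolved in favor of either source.
    """
    error_m = math.dist(vision, gps)
    return error_m <= tolerance_m, error_m


agrees, error_m = cross_check(Position(200.0, 5.0, 30.0), Position(203.0, 1.0, 30.0))
print("sources agree" if agrees else "POSITION DISAGREE", f"({error_m:.1f} m)")
```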

We have successfully demonstrated this principle on a U.S. Air Force program (“SE Vision”), in conjunction with our partners from the German Aerospace Center (DLR). Runway acquisition occurred at a large approach angle and was essentially instantaneous once the landing field was within the camera’s field of view. A comparison of the image-derived lateral and vertical landing-approach deviations with those indicated by the GPS-nav system is shown below for a missed approach (go-around) in a transport aircraft flight test:

Regulatory approval of the substantive applications of this autonomous navigation signal will need to evolve. As in many such innovations, the earliest adoption may be expected through regulatory “Variances” for rotary-wing operations.

WHERE IT APPLIES.

Based on the award of exclusive rights to a concept conceived by Dr. Kerr and patented in his name while employed at Astronics Max-Viz, Inc. (U.S. Patent No. 7,705,879), Kerr Avionics LLC is developing this unique, new software-based technology in the field of Enhanced Vision Systems for fixed- and rotary-wing pilotage. The concept was initially developed during a cooperative activity with an FAA researcher and was named Instrument Qualified Visual Range or “IQVR”.

The product is a program that greatly enhances the performance of existing Enhanced Vision Systems and can work with virtually any digital visible-light camera installed on an aircraft. It is applicable to currently certified Enhanced Vision Systems (EVS). The system combines minor modifications to ground-based LED lights, GPS-derived synchronization of an on-board video camera, and an off-the-shelf video processing unit running the software (the processing may stand alone or be integrated into the Flight Management System (FMS) or another cockpit computer). The system uniquely accomplishes the following:

- In fixed-wing operations, increases the range of current IR-based EVS sensors by between 25 and 50% during fog and other DVE (Degraded Visual Environment) flight and ground-taxi operations.

- In rotary-wing operations, differentiates between the desired, “target” lights, and confusing clutter (other lights, and daytime/nighttime background features) for unequivocal, early visual Landing Zone (LZ) acquisition.

- For Unmanned Aerial Systems (UAS/Drones), enables street-address-specific landing and location identification, as well as occupant status signaling during disaster search and rescue events.

There exists a compelling need for these capabilities in both fixed and rotary wing operations.

For fixed-wing aircraft in instrument flight environments, the Kerr Avionics IQVR image processing software can dramatically increase the range of multi-spectral EVS and reduce (and potentially virtually eliminate) the occurrence of missed approaches due to weather. We need only to prove the performance enhancements with specific data, and at least one major OEM will partner to fund our home-stretch to production software.

For rotary-wing aircraft, the Kerr Avionics IQVR image processing will also serve emergency first responders and life flight operators at night by enabling the pilot to declutter the lights on the ground and see only the discrete landing zone lights. For offshore platforms, IQVR will “cut through the murk” and show an early outline of the LZ.

For Unmanned Aerial Systems (UAS/Drones), IQVR enables street-address-specific landing and location identification, as well as occupant status signaling during disaster search and rescue events.

For climate change, IQVR offers a significant decrease in the total yearly carbon footprint of all aviation activities, through vastly improved airspace traffic flow and the virtual elimination of airline flight deviations due to bad visibility or weather.

UAS (UNMANNED AERIAL SYSTEMS) SEARCH & RESCUE APPLICATION.

UAS/Drone technology and applications/operations are becoming a huge industry. This nascent technology offers a stunning opportunity in practical applications that show immediate results and ROI benefits across a broad spectrum of public and private issues.

In the public sector, for example, UAS/Drones promise to fulfill search & rescue missions, and disaster and urban planning survey tasks, more quickly and accurately than could have been imagined just five years ago. For every task where a drone replaces a surface vehicle, the roads become less congested, the air less polluted, and the service and data are delivered and collected more quickly and efficiently. Emergency event managers are informed more quickly and accurately, and so can triage quickly and allocate properly prioritized resources in a timely manner.

Cost-intensive manned aerial searches and surveys become cost-effective and far more efficient, with multiple sectors searched and surveyed more accurately by UAS/Drones in the same period of time, and at a fraction of the cost, of just one manned aircraft covering just one sector.

The potential for extremely accurate and detailed data from an unmanned drone search/survey, compared to a manned aerial survey, exists only IF drones are written into a holistic plan designed to take full advantage of their efficiency and economy.

The keystones of such a “Holistic Plan” are in place today and need only be connected to pressing needs of Statewide disaster planning and urban congestion currently under review by city planners.

- SYNCHRONOUS DETECTION.

Synchronous Detection is image processing software that enables onboard drone cameras to recognize cooperative LED lights on the ground. Many COTS (Commercially Available Off-The-Shelf) LED flashlights may be modified with a button insert between the circuit and the ground side of the battery that encodes the light to be recognized as “special” by the drone camera.

Synchronized LED lights can be detected in a sea of “cultural lighting”, as in dense urban environments, or in bright daylight, when even the human eye cannot see the LED light. The software “subtracts” all light energy falling on the camera that is not coded, leaving only the target light visible.

Basic data can be encoded in the modified “LED flashlights” so the operator can select, for example, “Injured/Need Medical Assistance”, “Need Food/Water”, “OK for Now”, and “Evacuate Us-Urgent”.
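One way such a status could ride on the pulsed light is sketched below in Python. The scheme is entirely hypothetical and is not the patented coding: each bit is sent as one frame pair, with the light on in the first frame for a 1 and on in the second frame for a 0, so the sign of the subtracted pair carries the bit. The preamble, the two-bit codes, and all names are our own assumptions.

```python
from enum import Enum


class Status(Enum):
    OK_FOR_NOW = 0b00
    NEED_FOOD_WATER = 0b01
    NEED_MEDICAL = 0b10
    EVACUATE_URGENT = 0b11


PREAMBLE = (1, 1, 1, 0)  # hypothetical marker that precedes each message


def encode(status):
    """Return the light state for each frame pair as (on_in_first, on_in_second)."""
    bits = PREAMBLE + ((status.value >> 1) & 1, status.value & 1)
    return [(True, False) if bit else (False, True) for bit in bits]


def decode(differences):
    """Decode from per-pair beacon residuals (first frame minus second frame)."""
    bits = tuple(1 if d > 0 else 0 for d in differences)
    n = len(PREAMBLE)
    # Look for the preamble, then read the two payload bits that follow it.
    for i in range(len(bits) - n - 1):
        if bits[i:i + n] == PREAMBLE:
            return Status((bits[i + n] << 1) | bits[i + n + 1])
    return None


pattern = encode(Status.NEED_MEDICAL)
# Idealized camera side: the residual is +5 or -5 counts depending on the phase.
residuals = [5.0 * (int(first) - int(second)) for first, second in pattern]
print(decode(residuals))
```

Because the light is on for exactly one frame of every pair whatever the message, its average brightness to the eye does not depend on the data being sent.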

- State Government Emergency Management.

Now imagine that each household or occupied building in the State has an emergency packet with its own discrete “tuned” LED light, serialized and registered, for deployment in the immediate aftermath of a catastrophic natural disaster such as a major earthquake and/or massive flooding.

A similar application for search and rescue of hikers lost in the wilderness, a regular occurrence in the Cascades, would reap similar human and economic benefits.

This system works in concert with and as a backup for GPS guidance. Compromise of the GPS constellation either by jamming or natural EMP events in near-earth space would be devastating to Drone operations (and manned operations for that matter) without this backup.

- Commercial Applications.

For both manned and unmanned aerial vehicles, coded LED lights replace the landing zone lighting on helipads or serve as a discrete “addressed” landing zone at any street address for emergency response or delivery purposes.

First responders at traffic or other emergency sites can quickly lay out a four-light mobile landing zone for Life Flight Medical Evac helicopters.

How entrepreneurs commercialize this ability to arrive unambiguously at a point will revolutionize the delivery of goods and services, significantly reduce ground vehicle clutter and pollution, and build a tighter interactive connection, not just in cyberspace for data transfer but in the physical world for the transfer of goods and services, with minimal if any measurable impact on the surface transportation infrastructure.

Synchronous Detection for aerial navigation and aircraft guidance is a patented technology, currently under development for use in commercial and business aviation, in both fixed- and rotary-wing aircraft. Airports around the world are converting to LED lighting to reduce power consumption and cost.

The overarching benefit of Synchronous Detection in this aviation segment is the enhanced penetration of atmospheric obscurants like fog, smoke, heavy mist/rain, and dust by pulsed LED lighting. Theoretical models show a 50% or greater improvement in the penetration of typical obscurants by pulsed runway/landing zone lighting systems over conventional incandescent or non-pulsed LEDs.

Implementation of this software in the highly regulated segment of manned aircraft will virtually eliminate missed approaches and airline deviations and delays due to poor-visibility weather.